Security and Adversarial AI shields models from evasion, poisoning, prompt injection, and model theft using robust training, policy filters, and hardened APIs.

Deep-learning models inherit Security & Adversarial AI weaknesses from the same gradient-descent magic that makes them powerful. Attackers exploit differentiability, vast training corpora, lengthy LLM context windows, and public APIs to strike silently. Below, each threat is paired with proven defenses you can deploy today.

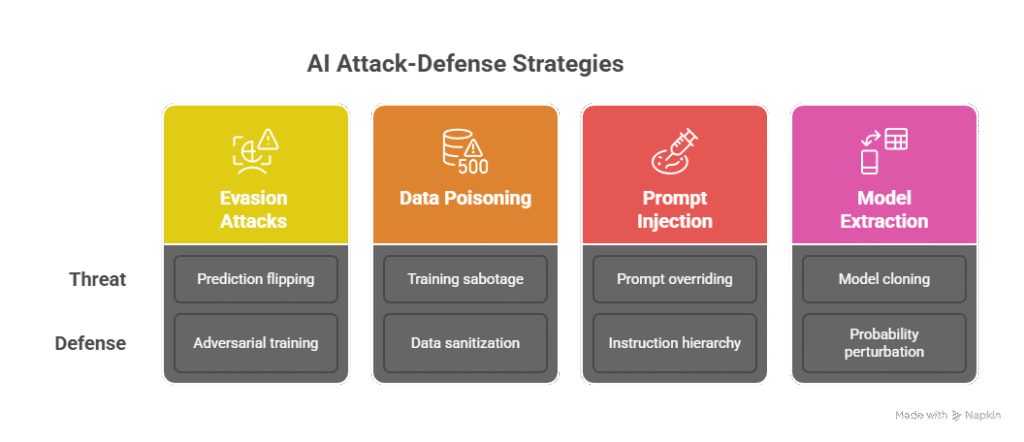

1 Evasion Attacks → Robust-Training Defenses

Threat. Crafted pixels or tokens leverage the model’s gradient to flip a prediction:

Fast Gradient Sign Method (FGSM), Projected Gradient Descent (PGD), and AutoAttack bundles can turn a “stop sign” into a 45 mph limit or inject >20 kHz audio that humans never hear.

Defenses.

- Adversarial training—re-train on perturbed samples; one Microsoft vision pilot cut attack success -45 % with only 3 ms latency overhead [1].

- Randomized smoothing—Gaussian noise + majority vote yields certified L2 robustness.

- Input transforms—JPEG re-encode or AutoEncoder denoise kills tiny perturbations.

- Confidence rejection—block low-certainty outputs or route them for human review.

2 Data Poisoning → Pipeline Hygiene & Backdoor Scans

Threat. Clean-label backdoors, gradient-matching samples, or label-flips poison training so a hidden trigger (brand-logo glasses, rare token) hijacks outputs.

Defenses.

- Data-sanitization clusters—K-NN / DBSCAN embeddings isolate outliers.

- Differentially private optimizers bound single-sample influence ε, easing backdoor impact.

- Neural Cleanse & STRIP hunt triggers; detection rates ≥ 90 %.

- Provenance logging via Git-LFS or DVC lets teams trace tainted data within minutes.

3 Prompt Injection → Instruction & Policy Shields

Threat. “Ignore all previous instructions…” or malicious <meta> tags override system prompts. LLM email-summaries have been tricked into forwarding entire inboxes.

Defenses.

- Instruction hierarchy locks system → developer → user → external order, blocking override tokens.

- Policy-model filtering (RLHF guardrails) cuts jailbreak replies -70 % in Microsoft red-team trials [2].

- Context segmentation masks attention so user text can’t read external docs.

- Content-security proxy summarizes URLs via RAG before they ever reach the LLM.

4 Model Extraction → API Hardening & Watermarks

Threat. Attackers hammer public endpoints, collect logits, then train KnockoffNets or OPT clones that reach 97 % of the original’s accuracy.

Defenses.

- Top-k or noise-perturbed probabilities raise KL-divergence, frustrating cloning.

- Rate-limit + IP fingerprinting throttles bulk harvest; junk labels can mislead scrapers.

- Logit watermarks embed sinusoid patterns; later, identical spectra reveal stolen weights.

- Guardian NN slices features; disagreement with the primary model flags extraction probes.

One-Look Threat-to-Defense Map

| Attack Stage | Flagship Mitigation | Key Metric |

|---|---|---|

| Evasion | Adversarial training, input randomization | Attack success ↓ 40–80 % |

| Poisoning | Data vetting, backdoor scans | Trigger detect ≥ 90 % |

| Prompt Injection | Policy model, context masks | Policy-violation ↓ 70 % |

| Model Extraction | Prob. limits, live monitoring | Clone similarity < 60 % |

Deployment Checklist

- Defense-in-depth: at least two layers per threat across training, inference, and operations.

- Metrics: track Robust Acc@ε, Trigger Detect Rate, Policy Violation %, Clone Sim %.

- Quarterly red-team sprints—use the latest attack corpora from MITRE ATLAS.

- Compliance mapping: align to NIST AI RMF, ISO/IEC 23894, and ATLAS techniques.

Need hands-on code? See our vision-defense playbook for PyTorch snippets.

Tags: adversarial-ai, model-security, machine-learning, cybersecurity, risk-management, ai-governance, red-team, data-pipeline

References

[1] Adversarial Machine Learning: Taxonomy and Terminology of Attacks and Mitigations, NIST, Mar 24 2025, https://csrc.nist.gov/pubs/ai/100/2/e2025/final csrc.nist.gov

[2] 3 Takeaways from Red Teaming 100 Generative AI Products, Microsoft Security Blog, Jan 13 2025, https://www.microsoft.com/en-us/security/blog/2025/01/13/3-takeaways-from-red-teaming-100-generative-ai-products/ microsoft.com

[3] Secure AI with Threat-Informed Defense, MITRE CTID, May 9 2025, https://ctid.mitre.org/blog/2025/05/09/secure-ai-v2/ ctid.mitre.org