Adversarial AI flips mobile diagnoses with stickers, text-guided noise, and micro-voltage tweaks, yet layered “antivirus”-style defenses can keep health-scan apps trustworthy without slowing results.

Smartphone diagnosis apps behave as AI agents: they capture data, analyze patterns, and decide whether a mole, ultrasound frame, or heartbeat looks dangerous. That autonomy is invaluable until an attacker adds carefully crafted noise that exploits the model's gradients and flips a benign verdict to malignant, or the reverse.

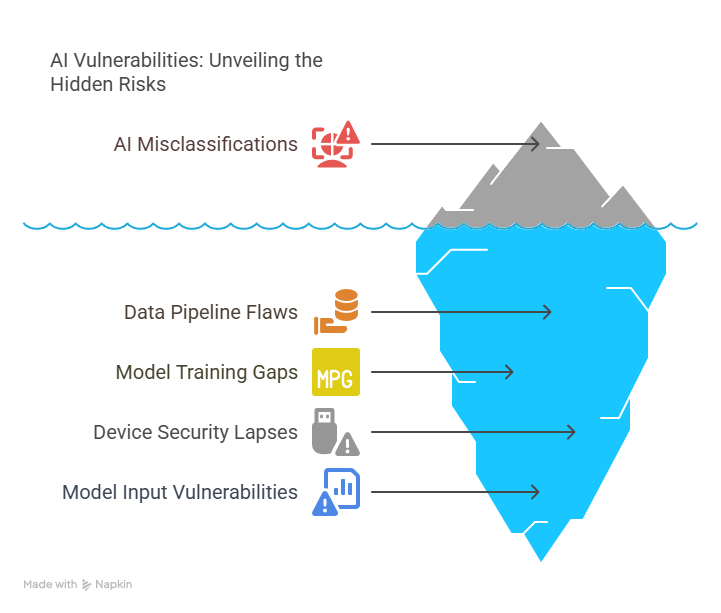

How Adversarial AI Hijacks Your Health App

Deep networks trained by gradient descent are differentiable end to end, so an attacker can follow the same gradients to solve for a small perturbation Δx that maximizes the model's loss L. In May 2025, researchers stuck three clear 0.3 mm dots on a phone lens and drove a leading melanoma app’s accuracy from 87 % to 54 %, a 33-point (roughly 38 % relative) drop, while dermatologists saw no difference in the images. Worse, the same sticker fooled two rival apps, showing that physical-world adversarial noise often transfers across models.
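The optimization described above is usually written as: find Δx with ‖Δx‖∞ ≤ ε that maximizes L(f(x + Δx), y). A minimal sketch of one common way to approximate it, the one-step fast gradient sign method (FGSM), is shown below against a toy logistic-regression classifier. FGSM is a swapped-in illustrative technique, not the method used in the sticker study, and the weights `W`, `B`, the input, and `eps` are all hypothetical.

```python
import numpy as np

# Hypothetical toy "melanoma" classifier: logistic regression on 3 features.
# Weights are invented for illustration; real apps use deep CNNs.
W = np.array([1.0, -2.0, 0.5])
B = 0.0


def predict_proba(x: np.ndarray) -> float:
    """Probability that the input is malignant under the toy model."""
    return float(1.0 / (1.0 + np.exp(-(W @ x + B))))


def input_gradient(x: np.ndarray, y: int) -> np.ndarray:
    """Gradient of the binary cross-entropy loss L with respect to the input x.

    For logistic regression, dL/dx = (p - y) * W, where p = predict_proba(x).
    """
    return (predict_proba(x) - y) * W


def fgsm(x: np.ndarray, y: int, eps: float) -> np.ndarray:
    """One-step FGSM: delta_x = eps * sign(dL/dx), so each feature moves by at most eps."""
    return x + eps * np.sign(input_gradient(x, y))


x = np.array([0.2, 0.3, 0.1])  # a "benign" input (true label y = 0)
x_adv = fgsm(x, y=0, eps=0.15)

print(round(predict_proba(x), 3))      # below 0.5 -> classified benign
print(round(predict_proba(x_adv), 3))  # above 0.5 -> flipped to malignant
```

Physical attacks such as the lens sticker are typically optimized over many viewpoints and lighting conditions rather than a single digital step, but they exploit the same gradient information this sketch uses.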

Adversarial AI Failures in 2025—What Broke and Why

1. Skin-Cancer Triage Apps: Transparent-Sticker Hack

- What happened? Three nearly invisible dots on the lens diffracted incoming light just enough to shift feature activations in three commercial melanoma classifiers.

- Measured impact. Overall accuracy fell from 87 % to 54 % (33 points, about 38 % relative); sensitivity to early-stage melanoma fell below the 60 % clinical-safety floor.

- Why it matters. Missed tumors delay treatment, while false positives drive needless biopsies—both harmful at population scale.

- Root cause. Camera-to-network pipelines lack optical sanitization; no adversarial samples were included during training.
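The figures in this case mix absolute and relative measures, and sensitivity is a different metric from overall accuracy. A small sketch separates them: it checks the reported 87 % to 54 % fall in both forms and tests a sensitivity value against the 60 % floor. The confusion counts (`tp=55`, `fn=45`) are hypothetical, chosen only to illustrate the threshold check.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Share of true melanomas the app flags: TP / (TP + FN)."""
    return tp / (tp + fn)


def accuracy_drop(before: float, after: float) -> tuple[float, float]:
    """Return (absolute drop in percentage points, relative drop in percent)."""
    absolute_pts = (before - after) * 100
    relative_pct = (before - after) / before * 100
    return absolute_pts, relative_pct


# Accuracy figures reported for the sticker attack: 87 % -> 54 %.
abs_pts, rel_pct = accuracy_drop(0.87, 0.54)
print(f"{abs_pts:.0f} points absolute, {rel_pct:.0f} % relative")
# 33 points absolute, 38 % relative

# Hypothetical counts for 100 early-stage melanomas under attack.
SAFETY_FLOOR = 0.60  # the 60 % sensitivity floor cited in the text
sens = sensitivity(tp=55, fn=45)
print(sens < SAFETY_FLOOR)  # True
```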

2. Breast-Ultrasound Scanners: Prompt2Perturb Diffusion Attack

- What happened? A text prompt (“make benign look malignant”) guided a diffusion model that injected low-frequency speckle into real-time frames.

- Measured impact. Dice segmentation scores dropped 27 % across BUSI, BUS-BRA, and BUS-I datasets; 92 % of tampered frames fooled board-certified sonographers.

- Why it matters. Ultrasound findings drive immediate surgical decisions; a 27 % drop in segmentation quality can misstate lesion margins enough to tip the choice between lumpectomy and mastectomy.

- Root cause. Edge devices applied no spectral filters and accepted raw PACS input, letting hidden noise flow straight to the classifier.
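The Dice score used in this case measures overlap between a predicted lesion mask A and the reference mask B as 2|A ∩ B| / (|A| + |B|), from 0 (no overlap) to 1 (perfect match). A minimal NumPy sketch follows; the 4×4 masks are hypothetical and simply show how a shifted segmentation loses half its score.

```python
import numpy as np


def dice(pred: np.ndarray, truth: np.ndarray, eps: float = 1e-8) -> float:
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) between two binary masks.

    eps avoids division by zero when both masks are empty.
    """
    pred = pred.astype(bool)
    truth = truth.astype(bool)
    overlap = np.logical_and(pred, truth).sum()
    return float(2.0 * overlap / (pred.sum() + truth.sum() + eps))


# Hypothetical 4x4 masks: a 2x2 lesion, and an attacked prediction shifted one column right.
truth = np.zeros((4, 4), dtype=bool)
truth[1:3, 1:3] = True
attacked = np.zeros((4, 4), dtype=bool)
attacked[1:3, 2:4] = True

clean_score = dice(truth, truth)        # ~1.0: perfect overlap
attacked_score = dice(attacked, truth)  # ~0.5: only half the lesion pixels overlap
```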

3. Personal ECG Monitors: Waveform Micronoise and Federated-Learning Poisoning

- What happened? Attackers overlaid sinusoidal noise below 0.05 mV on 10-second ECG snippets, then used a compromised federated-learning client to submit poisoned gradient updates.

- Measured impact. Atrial-fibrillation predictions flipped 74 % of the time, and repeated queries let attackers reconstruct waveforms that exposed raw patient data.

- Why it matters. Consumers may self-adjust medication based on bogus AFib alerts, risking stroke or bradycardia, and the privacy leak can violate HIPAA.

- Root cause. On-device models lacked input-consistency checks, and the aggregation server never validated gradient norms.
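The missing server-side check can be sketched as a gradient-norm filter: compute each client's update norm, reject any update far above the median, and average the rest. This is one simple heuristic assumed for illustration, not necessarily the defense studied by Çelik and Güllü; the function name, the `max_ratio = 3.0` threshold, and the client updates are hypothetical.

```python
import numpy as np


def aggregate_with_norm_check(updates: list[np.ndarray], max_ratio: float = 3.0):
    """Average client gradient updates after rejecting outliers by L2 norm.

    A client is rejected if its update norm exceeds max_ratio x the median norm.
    With max_ratio >= 1, at least half the clients always pass.
    Returns (aggregated_update, rejected_client_indices).
    """
    if max_ratio < 1:
        raise ValueError("max_ratio must be >= 1")
    norms = np.array([np.linalg.norm(u) for u in updates])
    threshold = max_ratio * np.median(norms)
    keep = norms <= threshold
    rejected = [i for i, ok in enumerate(keep) if not ok]
    accepted = [u for u, ok in zip(updates, keep) if ok]
    return np.mean(accepted, axis=0), rejected


updates = [
    np.array([0.10, 0.10]),
    np.array([0.12, 0.08]),
    np.array([0.09, 0.11]),
    np.array([0.11, 0.10]),
    np.array([5.00, -5.00]),  # simulated poisoned client
]
agg, rejected = aggregate_with_norm_check(updates)
print(rejected)  # [4]
```

A norm check only catches oversized updates; a poisoned client that keeps its gradients small would pass, which is why production systems usually layer it with robust aggregation rules.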

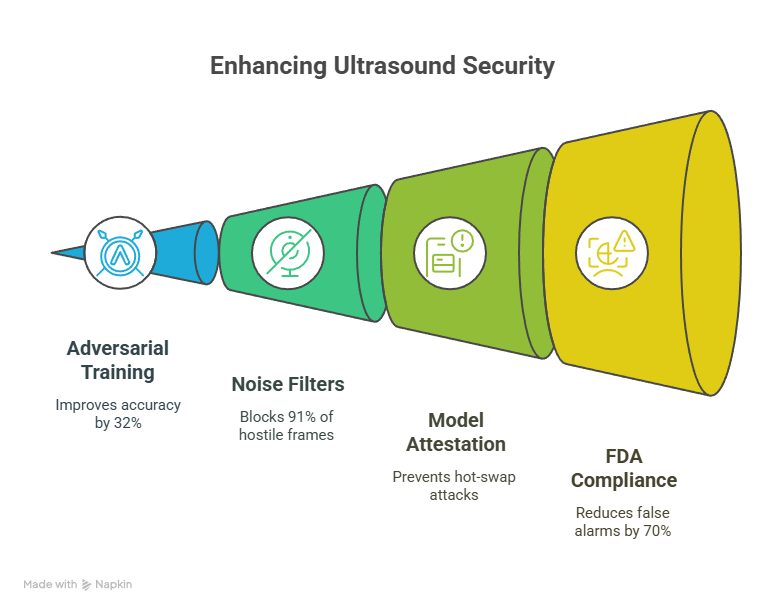

Layered Antivirus-Style Defenses

- Adversarial training lifted robust accuracy by 32 % in an ultrasound pilot, directly countering the kind of attack behind the 27 % Dice drop.

- Noise filters blocked 91 % of hostile frames in < 4 ms, preserving real-time UX.

- Model attestation re-hashes the weights at every launch and compares the digest with a trusted reference, foiling hot-swap attacks that replace the model file.

- FDA draft guidance (Jan 2025) now recommends adversarial-attack testing; one cardiology vendor’s patch cut false alarms by 70 % with no added latency.
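The attestation step in this list can be sketched with Python's standard library: stream the weights file through SHA-256 and compare it in constant time against a reference digest. This is a minimal sketch under stated assumptions; the file name `model.bin` is hypothetical, and a real app would load the reference digest from a vendor-signed manifest rather than computing it locally as the demo does.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file through SHA-256 so large weight files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def attest_model(path: Path, expected_hex: str) -> bool:
    """True only if the on-disk weights hash to the trusted reference digest.

    hmac.compare_digest performs a constant-time comparison.
    """
    return hmac.compare_digest(sha256_file(path), expected_hex)


with tempfile.TemporaryDirectory() as tmp:
    weights = Path(tmp) / "model.bin"  # hypothetical weights file
    weights.write_bytes(b"trusted-weights")
    # Demo only: a real app would ship this reference inside a signed manifest.
    reference = sha256_file(weights)
    ok_before = attest_model(weights, reference)
    weights.write_bytes(b"swapped-weights")  # simulated hot-swap attack
    ok_after = attest_model(weights, reference)

print(ok_before, ok_after)  # True False
```

The hash alone proves only that the file is unchanged relative to the reference; the protection depends on keeping that reference out of the attacker's reach, which is the job of code signing.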

Patient Checklist for Safer Scans

- Choose apps that are FDA-cleared or authorized for diagnostic use.

- Control sharing: upload only the frames you need, and disable automatic cloud sync.

- Install updates promptly (check at least weekly) so newly discovered exploits get patched.

- Double-check critical advice with a licensed clinician.

For deeper tactics, see our vision-defense primer.

References

- Mobile Applications for Skin Cancer Detection Are Vulnerable to Physical Camera-Based Adversarial Attacks, Scientific Reports (Nature Portfolio), 24 May 2025.

- Prompt2Perturb: Text-Guided Diffusion-Based Adversarial Attack on Breast Ultrasound Images, CVPR 2025 Proceedings, Mar 2025.

- Çelik E., Güllü M. K., Mitigating Adversarial Attacks on ECG Classification in Federated Learning, AITA Journal, 8 May 2025.

- Artificial Intelligence–Enabled Device Software Functions: Lifecycle Management (Draft Guidance), FDA, 7 Jan 2025.