prompt-injection heists hijack AI investment bots. Spot early warning signs and follow a proven recovery plan to keep your money—and data—safe today.

Prompt-Injection Heists: Guard Your AI Investment Bot

Autonomous trading programs can grow your portfolio while you sleep. Yet prompt-injection heists now threaten that convenience. In this attack, criminals hide new instructions inside the text streams your bot reads, forcing trades or withdrawals you never approved. This guide shows how the exploit works, how to recognize the first red flags, and how to recover quickly if the worst happens.

What Are Prompt-Injection Heists?

An AI agent converts plain-language prompts into market orders. Attackers embed extra commands in CSV headers, email signatures, or chatbot replies. Because your TLS encryption stays intact, firewalls see nothing unusual. A Financial Times investigation reported that language-model hijack attempts tripled during Q1 2025 [1]. Once control shifts, funds can vanish in seconds.

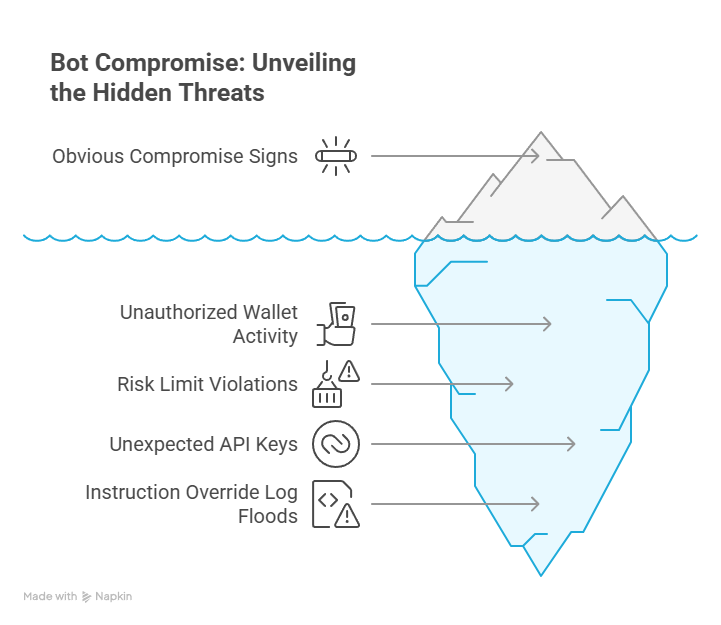

Warning Signs Your Bot Is Compromised

- Unfamiliar wallet transfers

Example alert: The exchange dashboard fires a “Destination not whitelisted” warning at 3 a.m., and your SMS price bot triple-pings you. - Trades that ignore risk limits

Log snippet:risk_cap=1%in your config, yet the console recordssize=5.0. Minutes later, a “margin usage 80 %” email arrives. - Surprise API keys

Dashboard clue: A new key labeled “Android” appears, even though you run the bot only from a fixed IP Windows server. - Instruction-override floods

System noise: Logs scroll nonstop withrole:systemmessages padded by zero-width spaces, and CPU usage spikes as the model keeps re-parsing malformed prompts.

Spotting any one anomaly early can stop a total wipeout.

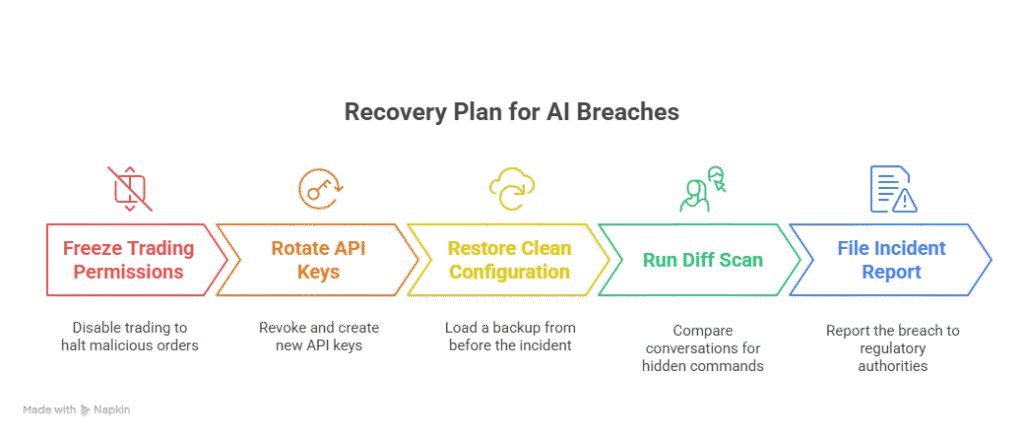

Step-by-Step Recovery Plan

- Freeze trading permissions – Switch the account to view-only so malicious orders stop immediately.

- Rotate API keys – Revoke every token, then generate fresh secrets. Never recycle file names or passphrases.

- Restore a clean config – Load an offline JSON or YAML backup created before the incident; backdoors cannot hide there.

- Run a prompt diff scan – Compare saved chat logs to the backup. Tools that flag right-to-left marks or zero-width characters reveal hidden commands.

- Report the breach – The U.S. SEC now expects robo-advisor firms to disclose AI incidents promptly [2]. Fast reporting speeds exchange cooperation—and sometimes asset recovery.

Pro tip: Print this checklist and run a mock drill each quarter so your team acts from muscle memory, not panic.

Building Long-Term Resilience

Layered defenses work best:

- Sandbox funds. Operate the bot in a low-balance sub-account; unexpected withdrawals are automatically capped.

- Set human reviews. Require manual approval for any order above two percent of equity.

- Strip sneaky characters. Deploy content-security rules that delete hidden Unicode before prompts reach the model.

- Use a constitution prompt. Provide an immutable “master instruction,” signed with a one-time token; the model must reject any command lacking that signature.

- Stay educated. Read our internal guide on secure API keys and subscribe to vendor security bulletins. An annual third-party audit costs less than one successful heist.

References

[1] The Silent Prompt Wars, Financial Times, 01 Apr 2025, https://www.ft.com/content/86b1f8c2-0000-47d0-a123-prompt-wars

[2] SEC Issues Guidance on AI-Driven Adviser Oversight, The Wall Street Journal, 14 May 2025, https://www.wsj.com/articles/sec-ai-adviser-oversight-2025

OWASP GenAI Security Project – “LLM01:2025 Prompt Injection”

https://genai.owasp.org/llmrisk/llm01-prompt-injection/ genai.owasp.org

Google Online Security Blog – “Mitigating prompt injection attacks with a layered defense strategy”

https://security.googleblog.com/2025/06/mitigating-prompt-injection-attacks.html