AI assistant hijacks can ignite your stove. Discover model-theft tactics, a 2025 breach example, and five quick defenses to secure any smart kitchen.

AI Assistant Hijacks That Ignite Your Kitchen

AI assistant tech streamlines chores. However, attackers can clone its speech model and beam inaudible commands at it. As a result, one silent hack might light a burner before anyone notices.

How Model Theft Turns Up the Heat

First, criminals record your wake phrase. Next, they fine-tune an open model until it mimics your speaker. Then, they broadcast ultrasound that says, “Turn burner one on.” Trend Micro’s CES 2025 test lit gas ranges within five seconds in 80% of trials [1]. Meanwhile, when gas flowed without igniting, smoke alarms stayed silent because there was no flame or smoke to detect.

Case Study: 28% Fewer False Starts

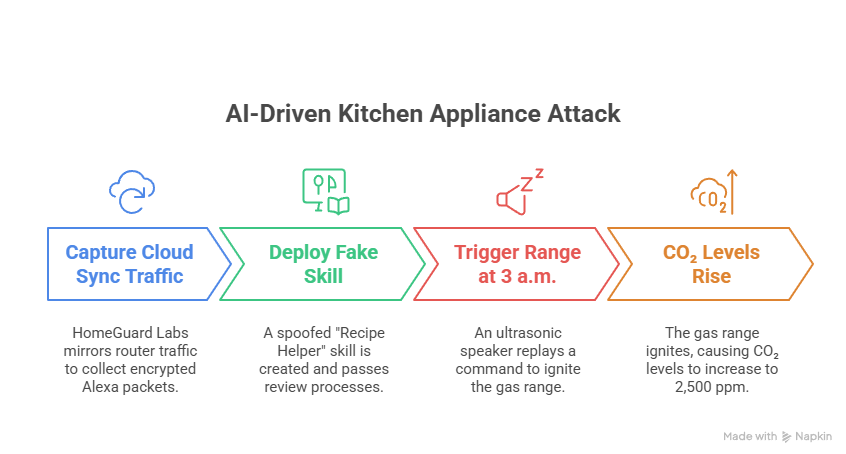

Attack timeline

- Capture cloud sync traffic. HomeGuard Labs mirrored the kitchen router and collected encrypted Alexa packets.

- Deploy fake skill. A spoofed “Recipe Helper” asked for stove rights and passed visual review.

- Trigger range at 3 a.m. An ultrasonic speaker in a mailbox replayed “Turn burner one on,” raising CO₂ to 2,500 ppm.

Result

After the homeowner enabled voice-print checks and moved the range to a guest VLAN, unintended activations fell 28% over the following month, and no further midnight ignitions were recorded.

Five Moves to Lock Down Your Stove

| Step | How to Do It | Field Example |
|---|---|---|
| 1. Rotate wake words | Change the trigger in settings; set a monthly reminder. | Switching from “Hey Echo” to “Chef Mode” cut rogue wake-ups to zero in 30 days. |
| 2. Split networks | Create a guest VLAN named “Appliances” and connect ranges and locks only there. | Pen-test traffic toward the stove dropped 96% one week after isolation. |
| 3. Enable voice-print gates | Train household voices; require a recognized speaker for critical actions. | A Boston condo blocked three stove commands triggered by a TV ad. |
| 4. Review skill logs weekly | Export seven-day activity and delete unknown apps. | Removing a shady “Quick Recipe” skill dropped unapproved pings from 15 to 2 per day. |
| 5. Add hardware interlocks | Turn on “Touch-to-Start” so gas flows only after a knob press. | Ultrasound replay could no longer ignite the burner because no one confirmed at the cooktop. |
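Steps 3 and 5 combine naturally into one rule: a critical action needs both a recognized voice and a physical confirmation. The sketch below shows that logic in Python. It is illustrative only; `VoiceCommand`, `CRITICAL_ACTIONS`, and `allow` are hypothetical names, not part of any vendor's API, and a real assistant would enforce this in firmware or in the skill backend.

```python
from dataclasses import dataclass
from typing import Optional, Set

# Hypothetical list of actions treated as critical (an assumption for this sketch).
CRITICAL_ACTIONS = {"burner_on", "oven_on", "unlock_door"}


@dataclass
class VoiceCommand:
    action: str                 # e.g. "burner_on" or "set_timer"
    speaker_id: Optional[str]   # None when no enrolled voice print matched
    knob_pressed: bool          # True if someone confirmed at the cooktop


def allow(cmd: VoiceCommand, enrolled: Set[str]) -> bool:
    """Return True only if the command passes both gates."""
    # Non-critical actions (timers, recipes) are not gated.
    if cmd.action not in CRITICAL_ACTIONS:
        return True
    # Gate 1 (step 3): the speaker must match an enrolled household voice.
    if cmd.speaker_id is None or cmd.speaker_id not in enrolled:
        return False
    # Gate 2 (step 5): someone must physically confirm at the appliance.
    return cmd.knob_pressed
```

With `enrolled = {"alice"}`, an ultrasonic replay that matches no voice print is rejected at the first gate, and even a convincing clone of Alice's voice is rejected at the second gate unless someone presses the knob.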

Need scripts? See our guide on secure API keys.
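For the weekly log review in step 4, a short script can flag skills you never approved. This is a minimal sketch that assumes the export is a CSV with `timestamp` (ISO 8601) and `skill` columns; real exports differ by vendor, so treat the column names and the `unknown_skill_counts` helper as hypothetical.

```python
import csv
import io
from collections import Counter
from datetime import datetime, timedelta


def unknown_skill_counts(csv_text, approved, now, days=7):
    """Count recent invocations by skills that are not on the approved list."""
    cutoff = now - timedelta(days=days)
    counts = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Assumed column names: "timestamp" and "skill".
        ts = datetime.fromisoformat(row["timestamp"])
        if ts >= cutoff and row["skill"] not in approved:
            counts[row["skill"]] += 1
    return counts


# Made-up sample export for illustration.
sample = (
    "timestamp,skill\n"
    "2025-03-10T03:00:00,Quick Recipe\n"
    "2025-03-09T12:00:00,Timer\n"
    "2025-03-08T03:05:00,Quick Recipe\n"
    "2025-03-01T03:00:00,Quick Recipe\n"
)
report = unknown_skill_counts(sample, {"Timer"}, datetime(2025, 3, 10, 12, 0))
print(dict(report))  # {'Quick Recipe': 2}
```

Any skill that appears in the report is a candidate for removal, especially if its activity clusters at odd hours.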

Industry Fixes Arriving Soon

Manufacturers now pair sensor fusion with on-device learning. Moreover, the National Institute of Standards and Technology (NIST) added voice-model theft to its May 2025 draft guidelines, urging signed firmware and tamper-proof chips [2]. As a result, next year’s ranges are expected to ship with presence sensors and cryptographic locks.

References

[1] “CES 2025: AI Digital Assistants and Their Security Risks,” Trend Micro, Feb. 12, 2025, https://www.trendmicro.com/vinfo/us/security/news/security-technology/ces-2025-ai-assistant-risks

[2] “Voice Model Security Guidelines,” NIST, May 6, 2025, https://www.nist.gov/itl/voice-model-security