Adversarial attacks on traffic-AI can flip a stop sign into “go.” Spot the risks to self-driving systems and learn practical steps to keep everyday drivers safe.

Adversarial Attacks on Traffic-AI: When Stop Means Go

Autonomous driving cameras read road signs in milliseconds. However, adversarial attacks on traffic-AI can trick them into seeing a red “STOP” as “SPEED LIMIT 45.” Consequently, one silent error may cause a very loud crash.

What Are Adversarial Attacks on Traffic-AI?

Typically, attackers design small, sticker-like patches that nudge the vision model toward the wrong class. They then print the patch on vinyl and, under cover of darkness, place it on a real sign. In simulated tests [1], such patches raised misclassification rates from 2% to 78%. Because the attack happens in the physical world rather than over the network, encryption such as Transport Layer Security (TLS) and firewalls never see it. Because the patch stays on the sign, the exploit can persist in daylight, rain, or glare.
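To make the mechanism concrete, the snippet below applies a targeted, FGSM-style step (the fast gradient sign method, one standard way to craft such perturbations) to a toy linear classifier. It is a minimal sketch, not the method used in [1]. The two labels, the weights, and the 4-pixel “image” are all hypothetical. Real physical patches are typically optimized against deep networks across many viewing conditions.

```python
# Minimal sketch: a targeted, FGSM-style perturbation against a toy linear
# classifier. The model, its weights, and the 4-pixel "image" are hypothetical;
# real sign recognizers are deep networks trained on full camera frames.

LABELS = ["STOP", "SPEED LIMIT 45"]
WEIGHTS = [0.8, -0.5, 0.3, -0.9]  # hypothetical weights; score > 0 -> label 1
BIAS = -0.1


def score(pixels):
    """Linear score: sum(w * x) + b. Positive means 'SPEED LIMIT 45'."""
    return sum(w * x for w, x in zip(WEIGHTS, pixels)) + BIAS


def predict(pixels):
    return LABELS[1] if score(pixels) > 0 else LABELS[0]


def sign(v):
    return (v > 0) - (v < 0)


def targeted_perturbation(pixels, epsilon):
    """Move every pixel by at most epsilon in the direction that raises the
    score toward the attacker's target class. For a linear model the gradient
    of the score with respect to the input is simply WEIGHTS, so the step is
    epsilon * sign(w); results are clipped to the valid [0, 1] pixel range."""
    return [min(1.0, max(0.0, x + epsilon * sign(w)))
            for x, w in zip(pixels, WEIGHTS)]


clean = [0.2, 0.6, 0.4, 0.5]
patched = targeted_perturbation(clean, epsilon=0.3)
print(predict(clean))    # STOP
print(predict(patched))  # SPEED LIMIT 45
```

No pixel moves by more than 0.3, yet every push lines up with the weights, so the score rises by 0.3 × (0.8 + 0.5 + 0.3 + 0.9) = 0.75, from about −0.57 to about 0.18. On real images with thousands of pixels that sum grows large. This is one common explanation for why much smaller per-pixel changes can still flip a prediction.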

Why It Matters to Human Drivers

First, false readings cascade. For example, adaptive cruise control may surge when it “sees” a higher speed limit. Second, insurance disputes multiply because drivers struggle to prove whether a sensor or a person made the mistake. Moreover, the U.S. National Highway Traffic Safety Administration (NHTSA) flagged sign spoofing as a top safety risk in its April 2025 framework [2]. Finally, public trust erodes: a few viral videos can stall adoption even if automated driving lowers overall crash rates.

Implications for Fully Autonomous Vehicles

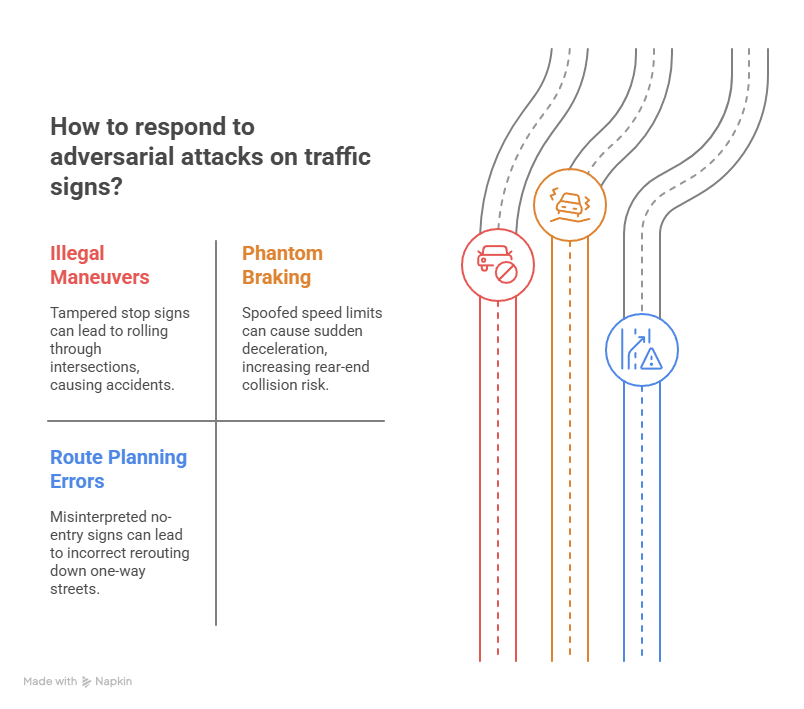

Fully self-driving cars rely less on humans and more on perception stacks. Thus, manipulated signs or projected light patterns can create three major hazards:

- Force illegal maneuvers: A tampered stop sign at a four-way intersection may fool the car into rolling through.

- Trigger phantom braking: A spoofed speed-limit drop can cause sudden deceleration, which, in turn, invites rear-end collisions.

- Break route planning: If the AI reads a no-entry sign as “detour ahead,” it might drive the wrong way down a one-way street.

Meanwhile, distracted fallback drivers may read email instead of watching the road, so the margin for recovery nearly disappears.

Defense Strategies Taking Shape

Manufacturers respond on three fronts. First, sensor fusion cross-checks camera output against LiDAR and HD-map data; whenever readings conflict, the car asks the driver to confirm. Second, adversarial training adds thousands of spoofed images to the training data so the model learns to resist malicious patterns. Third, sign authentication embeds invisible retro-reflective watermarks in the signs themselves, so a missing mark at read time suggests tampering.
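The cross-check can be sketched as a small decision rule. This is a minimal sketch under stated assumptions, not any manufacturer’s logic. The `SignReading` type, the `resolve_limit` function, the 5 mph tolerance, and the choice to hold the limit already in force during a conflict are all hypothetical. The sketch also collapses LiDAR and HD-map data into a single map prior and treats the watermark as a simple present/missing flag.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class SignReading:
    """Hypothetical per-sign inputs, in mph."""
    camera_limit: int             # limit the vision model read from the sign
    map_limit: Optional[int]      # HD-map prior; None if the map has no entry
    watermark_ok: Optional[bool]  # None if the sign carries no watermark


def resolve_limit(reading: SignReading, current_limit: int,
                  tolerance: int = 5) -> Tuple[str, int]:
    """Return (action, limit_to_use), where action is 'accept' or 'ask_driver'.

    Assumed policy, for illustration only:
      * a sign that should carry a watermark but does not is treated as tampered;
      * camera and map must agree within `tolerance` mph;
      * on any conflict, keep the limit already in force and ask the driver
        to confirm.
    """
    if reading.watermark_ok is False:
        # Expected watermark missing: distrust the camera reading entirely.
        return ("ask_driver", current_limit)
    if reading.map_limit is None:
        # Nothing to cross-check against; fall back to the camera reading.
        return ("accept", reading.camera_limit)
    if abs(reading.camera_limit - reading.map_limit) <= tolerance:
        return ("accept", reading.camera_limit)
    # Camera and map disagree: hold the current limit until the driver confirms.
    return ("ask_driver", current_limit)


# A patched 25 mph sign read as 45 while the map says 25:
print(resolve_limit(SignReading(45, 25, None), current_limit=25))
# ('ask_driver', 25)
```

Holding the current limit, rather than jumping to either reading, is a deliberate choice in this sketch: a spoofed increase does not cause a surge, and a spoofed drop does not cause phantom braking while the driver decides.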

Driver & Owner Safety Checklist

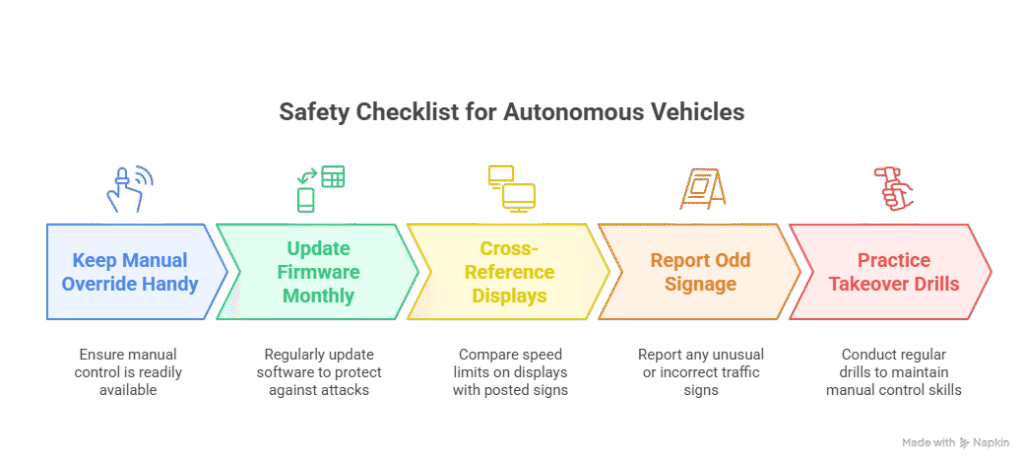

Owners can boost safety with this quick checklist:

- Keep manual override handy. Disable auto-acceleration above 30 mph unless you visually confirm the sign.

- Update firmware monthly. Patches often harden the vision model against new attacks.

- Cross-reference displays. If dash and mobile-map limits disagree, trust the posted sign, not the screen.

- Report odd signage. Note the location and, once safely stopped, photograph the sign; then send both to your local DOT.

- Practice takeover drills. Run a five-minute manual-control drill each month so muscle memory stays fresh.

References

[1] “Simulated Adversarial Attacks on Traffic Sign Recognition,” MDPI Engineering Proceedings, Apr 2025, https://www.mdpi.com/2673-4591/92/1/15

[2] “NHTSA Issues New Policies on Autonomous-Vehicle Safety,” U.S. Department of Transportation, Apr 24, 2025, https://www.transportation.gov/av-safety-2025